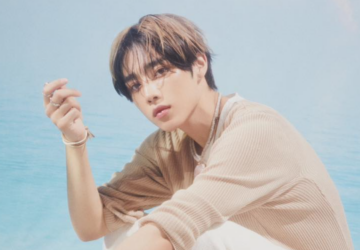

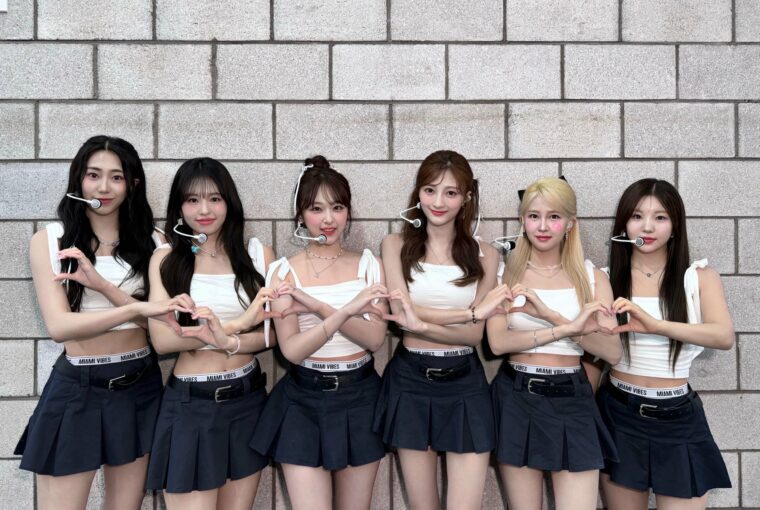

FCENM has announced that they will be taking legal action against the creators and distributors of deepfake (AI-generated) videos involving their artists, ILY:1. The label also expressed deep concern over the potential harm these videos could cause to the group’s reputation and image.

ILY:1 Company FCENM Vows Legal Action Against Deepfake Video Creators

On August 31, FCENM made it clear that they are committed to protecting their artists and will pursue all available legal avenues to combat the spread of such harmful content.

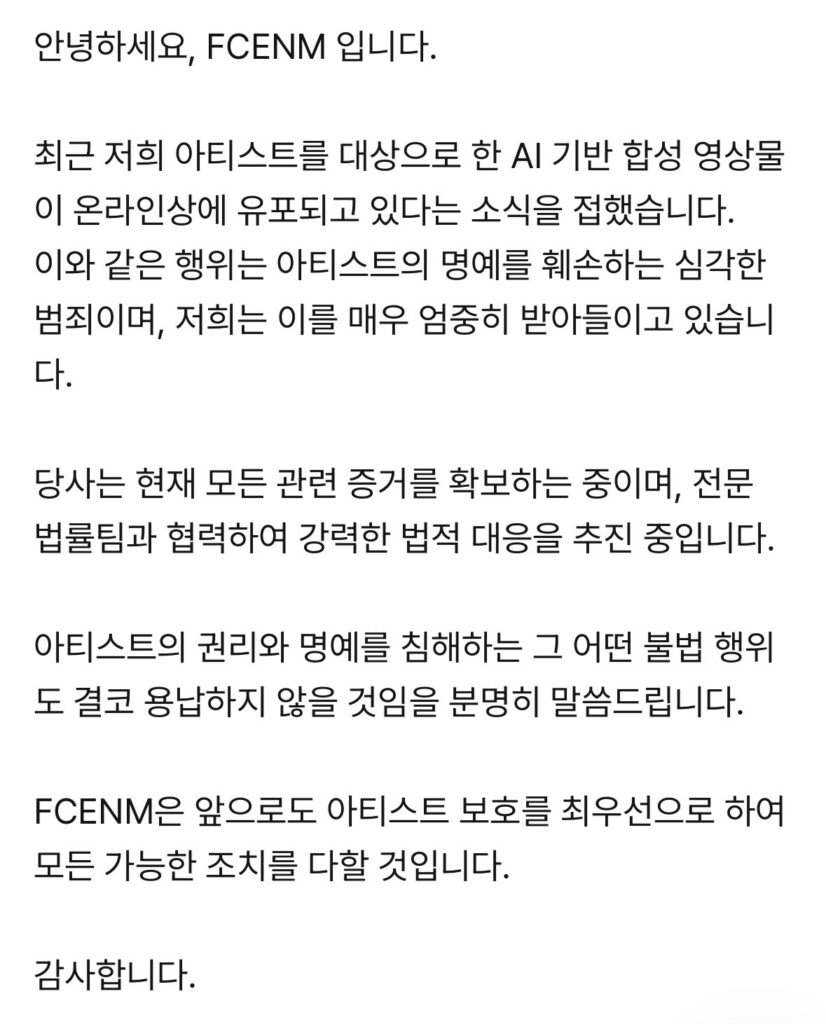

“We have recently heard that AI-based synthetic videos targeting our artists are being distributed online. Such acts are serious crimes that defame the artist’s reputation, and we take this very seriously,” the statement read.

“We are currently gathering all relevant evidence and are working with our professional legal team to pursue strong legal responses. We want to make it clear that we will never tolerate any illegal acts that infringe upon the rights and honor of artists. FCENM will continue to take all possible measures to prioritize the protection of artists,” it continued.

This decision comes in response to a recent alarming trend of deepfake pornography targeting female idols, which has been circulating in various online chatrooms. These chatrooms, created with the malicious intent of humiliating and degrading women, have been at the center of widespread public outrage since they were exposed earlier this month. The issue first gained attention when similar deepfake content was discovered in school chatrooms, where sexually explicit images and videos of classmates were being shared.

FCENM is now the second entertainment company to publicly announce legal action against the creators of these deepfake videos. JYP Entertainment previously took a similar stance to protect the members of TWICE, who were also targeted in these disturbing videos. Both companies' actions reflect a growing determination within the industry to safeguard artists from the harmful effects of such technology.